What is Oracle Data Pump Bundle Patch?

Data Pump Bundle Patches are the Proactive patches for Datapump. Oracle generally doesn't include the Bug fixes for Datapump regular Database Bundle Release updates but are instead, Oracle is releasing the Data pump bug fixes separately as Datapump proactive patches. These patch bundles include performance and stability fixes for Datapump. The patches are only available on DB versions 19.10 or higher.

One major benefit of these patches is that the application of

data pump bundle patches will see a significant performance improvement for the datapump.

We can refer the below document for downloading the latest Data pump Bundle patches.

Data Pump Recommended Proactive Patches For 19.10 and Above (Doc ID 2819284.1)

Lets see how we can apply the Data Pump Bundle Patch in our 19c RAC environment.

Source Environment setup :

RAC Database : LABDBPR ( labdbpr1 and labdbpr2)

GRID Home : /u01/app/19.3.0.0/grid

RDBMS Home : /u01/app/oracle/product/19.3.0.0/dbhome_1

Version : Oracle Database 19c EE - Production Version 19.17.0.0.0

hosts : oraprim01 & oraprim02

[oracle@oraprim01 software]$ $ORACLE_HOME/OPatch/opatch lspatches | grep "Database Release Update"

34419443;Database Release Update : 19.17.0.0.221018 (34419443)

[oracle@oraprim01 software]$

1) Download and unzip the patch (p34734035_1917000DBRU_Generic.zip) in staging area on all the nodes of the cluster.

Node 1:

[oracle@oraprim01 software]$ ls -ltr

total 2304

drwxr-xr-x. 4 oracle oinstall 48 Nov 21 02:52 34734035

-rw-rw-r--. 1 oracle oinstall 26780 Dec 7 06:11 PatchSearch.xml

-rwxr-x---. 1 oracle dba 2328024 Dec 16 07:47 p34734035_1917000DBRU_Generic.zip

[oracle@oraprim01 software]$

2) ensure that that below perquisites are met.

2a) Ensure that 19 Release 19.17.0.0.221018DBRU Patch Set Update (PSU) 34419443 is already applied on the Oracle Database.

2b) Ensure that the Oracle home on which you are installing the patch or from which you are rolling back the patch is Oracle Database 19 Release 19.17.0.0.221018DBRU.

2c) Ensure that you have OPatch 19 Release 12.2.0.1.32 or higher. Oracle recommends that you use the latest version available for 19 Release 12.2.0.1.32.

2d) Determine whether any currently installed interim patches conflict with this patch 34734035 as shown below:

lets check for the confict in our environments.

export ORACLE_HOME=/u01/app/oracle/product/19.3.0.0/dbhome_1

export PATH=$ORACLE_HOME/bin:$PATH

$ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -ph ./

Node1:

Node2:

we can see that there no patches that are conflicting with this patch (34734035 ) that we are going to apply.

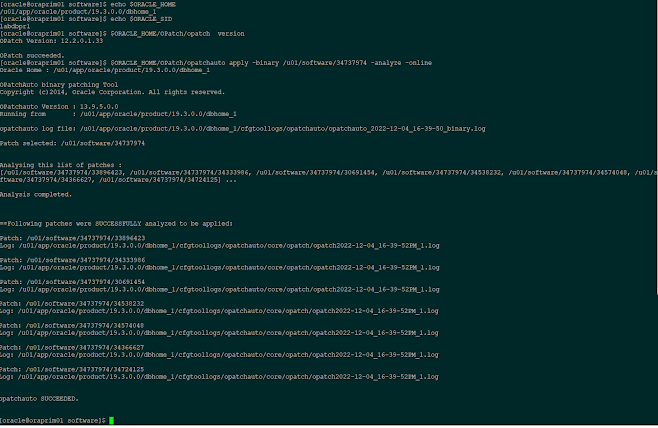

3) Apply the patch.

For a RAC environment, shut down all the services (database, ASM, listeners, nodeapps, and CRS daemons) running

from the Oracle home of the node you want to patch. After you patch this node, start the services on this node. we can stop the database instance on node1 using srvctl stop instance command. [oracle@oraprim01 34734035]$ srvctl status database -d labdbpr

Instance labdbpr1 is running on node oraprim01

Instance labdbpr2 is running on node oraprim02

[oracle@oraprim01 34734035]$ srvctl stop instance -d labdbpr -n oraprim01

[oracle@oraprim01 34734035]$ srvctl status database -d labdbpr

Instance labdbpr1 is not running on node oraprim01

Instance labdbpr2 is running on node oraprim02

[oracle@oraprim01 34734035]$Apply the patch on this node

Start the database on this node

[oracle@oraprim01 34734035]$ srvctl status database -d labdbpr

Instance labdbpr1 is not running on node oraprim01

Instance labdbpr2 is running on node oraprim02

[oracle@oraprim01 34734035]$ srvctl start instance -d labdbpr -n oraprim01

[oracle@oraprim01 34734035]$ srvctl status database -d labdbpr

Instance labdbpr1 is running on node oraprim01

Instance labdbpr2 is running on node oraprim02

[oracle@oraprim01 34734035]$4) Stop the database instance on node 2 and apply the patch.

5) Post Patch Installation steps.

6a) stop the database instance on the node where we are rolling back the patch.

6c) start the database/services from the node

The following are the bugs fixed by this patch:

24338134: DBMS_METADATA.GET_DDL GENERATES INCORRECT DDL FOR TABLE WITH USING INDEX CLAUSE

25143018: TRANSFORM=DISABLE_ARCHIVE_LOGGING:Y NOT HONORED LOB SEGMENTS

26565187: DATA PUMP EXPORT PERFORMANCE IS MUCH SLOWER IN 12.2

26995275: MISSING ALTER ANY SEQUENCE GRANT IN THE DATAPUMP_IMP_FULL_DATABASE ROLE

27679005: CARTESIAN PRODUCT IN INDEX COMPOSITE PARTITION DURING EXPORT.

28318139: ORA-31003 ERROR WHEN IMPORTING FULL DATABASE IN PARALLEL

28357349: SCHEMA LEVEL EXPORT/IMPORT CHANGES VIRTUAL COLUMN DEFINATION

28485837: MOVE CHUNK: FAILS WITH ORA-00955 WHEN UNIQUE INDEX AND CONSTRAINT IS CREATED

28555193: DBMS_METADATA.GET_DDL CAPTURE INCORRECT STORAGE OPTIONS OF THE XML COLUMN ON GTT

28640918: SLOW PARSE ON SQL FOR TRANSPORTABLE TABLESPACES

28771564: DATAPUMP EXPORT INVOKED BY A PRIVILEGE USER EXECUTES A QUERY FOR V$OPEN_CURSOR

28990738: 12.2 DBMS_METADATA.GET_DDL IS SLOW DUE TO SLOW ACCESS ON DICTIONARY VIEWS

29276889: ATP-D: DATA PUMP IMPORT FROM ATP-D INSTANCE TO A LOCAL DB INSTANCE FAILS

29284458: ORA-1426 DBMS_METADATA REFERENCE PARTITIONS HIGHER NUMBER

29450761: CANT IMPORT INTO AN EXISTING TABLE WITH EXTRA COLUMNS WHEN ENCRYPTION USED

29500928: IMPDP DIFFERENT BEHAVIOUR FOR IDENTITY COLUMN WHEN IMPORT ALL VERSUS METADATA_ONLY

29543605: 18.4 ADWC - ORA-39242: UNABLE TO EXPORT/IMPORT "LONG RAW" DATA TYPE

29613245: ORA-31684 ORA-39112 WITH FIX 28539085 AND VERSION=11.2

29792959: IMPDP WITH TRANSFORM=DISABLE_ARCHIVE_LOGGING:Y GENERATES ARCHIVES

29959025: EXPDP RUNNING LONG TIME QUERYING KU$_SUBPARTITION_EST_VIEW WHEN PROCESSING TABLE_DATA

30155338: POSSIBLE DEADLOCK/TIMEOUT ERRORS DURING PARALLEL IMPORT WITH TABLE_EXISTS_ACTION=REPLACE

30157766: ORA-21560 DBMS_METADATA.FETCH_DDL IN 19C NOT IN 12.2

30191952: TTS FAILS WITH ORA-01422 ON V$ENCRYPTION_WALLET

30430932: DBMS_METADATA NOT DISPLAYING THE SEMICOLON AND SLASH FOR TYPE SPECIFICATIONS

30582819: REMAP TABLESPACE IS NOT CONSIDERED FOR LOCAL TEMPORARY TABLESPACE DURING IMPDP

30662417: IMPDP WORKER TERMINATED WITH ORA-39029 AFTER MULTIPLE ORA-01775

30763851: IMPDP 11.2 TO 18C OR HIGHER HITS ORA-904 WHEN TABLES HAVE EXTENDED STATISTICS

30822078: IMPDP VERY SLOW DUE TO PROCESS REORDERING

30858671: 18C DBMS_METADATA.GET_DDL FAILED WITH ORA-16000 IN READ ONLY MODE

30928455: DATA PUMP EXPORT | HITTING ORA-31637 WHILE RUNNING DATA PUMP-DPLOAD CONCURRENCY TEST IN SAME PDB

30944402: SELECT FROM MASTER TABLE RUNS SLOW DURING TABLE_DATA EXPORT WHEN THERE ARE MANY SUBPARTITIONS

30978304: ORA-20000 DURING IMPDP WITH STATS AND THE UNIQUE INDEX FOR THE PK IS NOT CREATED

31008542: DPUMP IMPORT FAILS WITH ORA-39083:TABLE:AUDSYS.AMGT$DP$AUD$UNIFIED

31031133: SIMULTANEOUS DATA PUMP JOB CREATION FAILS DUE TO ATOMICITY WINDOW

31050896: PARALLEL DATAPUMP SLOW ON CONSTRAINTS

31174337: DBMS_METADATA.GET_DDL GENERATES NO KEYWORDS FOR NOT COMPRESSED INDEXES

31191614: TTS EXPDP QUERIES V$ENCRYPTED_TABLESPACES FOR EVERY TBS SLOWING DOWN PERFORMANCE

31200854: ADB-D: IMPORT PERFORMANCE OF PACKAGE_BODY

31393386: SPIN-OFF OF BUG# 31317961 FOR PARTIAL BACKOUT OF BUG# 27403988 FROM MAIN LABEL

31402031: DBMS_METADATA_UTIL THROWS AN INVALID CURSOR EXCEPTION.

31412130: ADBD:: COMPLETE FIX FOR 29543605 WHICH INCLUDES ALL THE MISSING FILES

31424070: APPSST19C: XTTS PDB - TABLE IMPORT/CREATION FAILED WITH ORA-39083 ORA-14334

31711479: ADB-S: ORA39126 AND ORA01031 WHILE IMPORT USING FA FULL DUMP INTO ADB-S

31725941: TOTAL ESTIMATION USING BLOCKS METHOD IS MISSING STARTING WITH 12.2

31830685: ZDM : IMPORT ADW-S DB LINK MIGRATION THROWS INTERNAL ERROR

31976738: DATAPUMP IMP FAILURE- ORA-39844: BAD STREAM FORMAT DETECTED: [KLAPRS_15] [31] [60] [4] [1]

32077706: ORA-60 FOR MASTER TABLE WHEN INHERITED COMPRESSION TABLESPACE SETTING

32096059: IMPDP TO 19C USING EXPORT DUMP OF 11.2.0.4 HANGS WITH ENQ: TM - CONTENTION

32206227: PDB IS IN MIGRATE STATUS AFTER DBCLI UPGRADE FROM 12.1.0.2 TO 19.8.0.0.0

32370367: EXPDP IN 19.7 THREE TIMES SLOWER THAN IT WAS IN 11.2.0.4

32452792: DBMS_METADATA.GET_DDL GETS WRONG OUTPUT FROM 12.2.0.1. TESTED TILL 19.3.0.0

32512780: PROCOBJ PLSQL SCRIPTS ARE NOT EXCLUDED ON IMPORT WITH EXCLUDE=TAG

32647307: ADB-D:: PACKAGE BODIES IMPORT SLOWER AFTER AUTONOMOUS REFRESH TO 19.10DBRU

32731035: ATPD MIGRATION:ORA-04021: TIMEOUT OCCURRED WHILE WAITING TO LOCK OBJECT

33163877: ATPD MIGRATION:IMPDP HITS TABLE OR VIEW DOES NOT EXIST ON SOME DATAPUMP RELATED TABLES

33204663: TCH19C :: ORA-39139: DATA PUMP DOES NOT SUPPORT XMLTYPE OBJECTS WHEN DOING XTTS WITH BINARY XML STORAGE

33297599: UNUSED XMLTYPE/CLOB COLUMNS CAUSE IMPORT FAILURE

33346378: REWRITE DATA PUMP PATCH LOCKING TEST: TKDPATCHRAC.TSC

33448450: TCH19C :: ORA-01647: TABLESPACE 'APPS_TS_TX_DATA' IS READ-ONLY, CANNOT ALLOCATE SPACE

33470563: METADATA API FAILS TO RECOGNIZE TAB CHARACTER AS DELIMITER WHEN PARSING SOURCE LINES OF TYPE OBJECT

33498804: DATAPUMP IMPORT IGNORES EXCLUDE AND INCLUDE VALUES FOR TAGS FOR IMPORT CALLOUTS

33517865: Fix for Bug 33517865

33526315: OCI FADEV: IMPORT ERROR - PLS-00201: IDENTIFIER 'SYS.DBMS_AQADM' MUST BE DECLARED

33660169: CONSOLIDATED BUG FOR DATA PUMP AQ FIXES 31338354, 31844376, 31868443 FOR 19.10 AND LATER

33720650: TCH19C :: OCI-21500: INTERNAL ERROR CODE [QMCXDGETQNAMEINFO2], [14003] IN XMLTYPE CLOUMN TYPE

33731212: SELF DEADLOCK ON PACKAGE SYS.DBMS_TTS DURING 19.13 + ONEOFF'S DATAPATCH RUN ..

33735435: TRACKING BUG FOR COMBO OF 32759991 32878145 32919937 32984678 (REPLACEMENT FOR MINI MLR 33407604)

34052641: END_PLUGTS_BLK OBJECT TYPE MISSING FROM FULL TTS EXPORT WHEN INCLUDE SPECIFIED

34174611: OCI FADEV: IMPDP CRASH AS AQ DEPENDENT TABLES DIDN'T GET EXPORTED IN TABLE MODE

34457840: MISSING SYS.DBMS_AQ_IMP_INTERNAL.IMPORT_QUEUE_TABLE CALL FROM DATAPUMP IMPORT

34525626: TRACKING BUG TO MERGE 33599275 AND 33498804 SO CAN BE BACKPORTED TOGETHER TO 19.16

34600787: GET_DDL FAILS TO GENERATE TYPE DEFINITION WHEN NOT EVOLVED AND HAS TRAILING BLANK LINE

34710181: BUG FIX FOR 34119289 ADDS XML STRUCTURE REF THAT DOESN'T EXIST IN 11.2 CAUSING TEST FAILURES

Thank you

Sambaiah Sammeta