Oracle Grid Rolling Upgrade from 18c to 19c.

This document describes how to upgrade Oracle Grid Infrastructure from 18c to 19c in a rolling fashion.

In this post, we will upgrade Oracle Grid Infrastructure 18c to 19.8.0.0 (19c with the July 2020 RU). Let's walk through the steps.

Pre-upgrade checks: Below are the prerequisites to check before planning the grid upgrade.

1) Check if /u01 has enough free space.

It is advised to have a minimum of 30 GB to 50 GB of free space in /u01.
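The free-space check can be scripted. The following is a minimal sketch, assuming bash and a POSIX `df`; the function name `has_free_gb` is illustrative and not part of any Oracle tooling.

```shell
#!/usr/bin/env bash
# has_free_gb PATH MIN_GB
# Succeeds when the filesystem holding PATH has at least MIN_GB gigabytes available.
# The function name is an illustrative assumption.
has_free_gb() {
    local path="$1" min_gb="$2" avail_kb
    # df -Pk prints POSIX single-line output in 1 KB blocks; field 4 is the available space.
    avail_kb=$(df -Pk "$path" | awk 'NR == 2 { print $4 }')
    # An empty value means df could not report on PATH.
    [ -n "$avail_kb" ] || return 2
    [ "$avail_kb" -ge $(( min_gb * 1024 * 1024 )) ]
}
```

For example, `has_free_gb /u01 30 || echo "Less than 30 GB free in /u01"` prints a warning when the lower bound from this step is not met.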

2) Patches to be applied before upgrading to 19c.

See the following My Oracle Support document for the list of patches that need to be applied before upgrading to 19c:

Patches to apply before upgrading Oracle GI and DB to 19c or downgrading to previous release (Doc ID 2539751.1)

Check whether patch 28553832 is already applied to the Grid home. If it is not applied, apply it.

This patch is required on the 18c Grid home to upgrade it to 19c.

[oracle@node1 ~]$ . oraenv

+ASM

[oracle@node1 ~]$ $ORACLE_HOME/OPatch/opatch lsinv | grep 28553832

28540666, 28544237, 28545898, 28553832, 28558622, 28562244, 28567875
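A plain grep also matches longer patch numbers that happen to contain the same digits, so a whole-word match is a little safer in a script. This is a minimal sketch; the helper name `patch_listed` is an assumption made for illustration, not an OPatch feature.

```shell
#!/usr/bin/env bash
# patch_listed PATCH_ID
# Reads inventory text on stdin and succeeds only when PATCH_ID
# appears as a whole word (grep -w), not as part of a longer number.
patch_listed() {
    local patch_id="$1"
    grep -qw -- "$patch_id"
}
```

It could be used as `$ORACLE_HOME/OPatch/opatch lsinv | patch_listed 28553832 && echo "patch 28553832 is present"`.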

4) Set SGA in ASM to minimum supported value.

ASM needs at least 3 GB of SGA to upgrade to (and run) GI 19c correctly.

sqlplus / as sysasm

SQL> show parameter sga_max_size

SQL> show parameter sga_target

Set them if they are below 3 GB:

SYS@+ASM1> alter system set sga_max_size = 3G scope=spfile sid='*';

SYS@+ASM1> alter system set sga_target = 3G scope=spfile sid='*';
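If the values are collected by a script, the comparison against the 3 GB minimum can be sketched as below. It assumes the value arrives either as plain bytes or with a K, M or G suffix (for example 3G or 1136M); the function names are hypothetical.

```shell
#!/usr/bin/env bash
# to_mb VALUE: prints VALUE converted to megabytes.
# Accepts plain bytes or an integer with a K/M/G suffix (an assumption about the input).
to_mb() {
    local value="$1" number unit
    number="${value%[KkMmGg]}"      # strip one trailing unit letter, if any
    unit="${value#"$number"}"       # whatever was stripped
    case "$number" in
        ''|*[!0-9]*) return 1 ;;    # reject anything that is not a plain integer
    esac
    case "$unit" in
        G|g) echo $(( number * 1024 )) ;;
        M|m) echo "$number" ;;
        K|k) echo $(( number / 1024 )) ;;
        *)   echo $(( number / 1024 / 1024 )) ;;   # no suffix: value is in bytes
    esac
}

# sga_meets_minimum VALUE: succeeds when VALUE is at least 3 GB (3072 MB).
sga_meets_minimum() {
    local mb
    mb=$(to_mb "$1") || return 1
    [ "$mb" -ge 3072 ]
}
```

For instance, `sga_meets_minimum 1136M || echo "raise sga_max_size and sga_target to 3G"` flags an undersized setting.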

5) Verify no active rebalance is running

SYS@+ASM1> select count(*) from gv$asm_operation;

COUNT(*)

----------

0

6) Download the required software and stage it on the first node of the cluster.

a) Download from Oracle Software Delivery Cloud (https://edelivery.oracle.com)

GRID Software: V982068-01.zip ("Oracle Database Grid Infrastructure 19.3.0.0.0" for Linux x86-64)

b) Latest 19c Grid Infrastructure Release Update (RU), July 2020 RU - Patch 31305339: GI RELEASE UPDATE 19.8.0.0.0

c) Latest OPatch release, Patch 6880880, for 19.x and all other database versions used.

- p6880880_190000_Linux-x86-64.zip

- https://updates.oracle.com/download/6880880.html

d) Copy the software to /export/Sam/19c-Grid/

7) Create the required directories

As the root user on Node 1:

mkdir -p /u01/app/19.0.0.0/grid

If it's an Exadata machine, you can use the dcli command to create the directories on all the nodes in one shot.

dcli -l root -g /root/dbs_group mkdir -p /u01/app/19.0.0.0/grid

dcli -l root -g /root/dbs_group chown oracle:oinstall /u01/app/19.0.0.0/grid

8) Extract the Grid software.

The 19c Grid software is extracted directly into the Grid home. The grid runInstaller option is no longer supported. Run the following command on the database server where the software is staged.

As the grid user on node 1:

unzip -q /export/Sam/19c-Grid/V982068-01.zip -d /u01/app/19.0.0.0/grid

9) Run the Cluster verification utility.

As the grid user on node 1:

cd /u01/app/19.0.0.0/grid/

unset ORACLE_HOME ORACLE_BASE ORACLE_SID

$ ./runcluvfy.sh stage -pre crsinst -upgrade -rolling -src_crshome /u01/app/18.0.0.0/grid -dest_crshome /u01/app/19.0.0.0/grid -dest_version 19.8.0.0.0 -fixup -verbose

When the Cluster Verification Utility (CVU) discovers issues, it generates a runfixup.sh script in the /tmp/CVU_19.0.0.0.0_grid directory. Be aware that this script makes changes to your environment. You will be given the opportunity to run this later in the section "Actions to take before executing gridSetup.sh on each database server".

10) ACFS filesystem

If you have mount points on ACFS, it is recommended to stop them cleanly before executing gridSetup.sh.

During the upgrade phase the script tries to shut down the entire cluster stack on the node, and if the ACFS unmount goes wrong you will receive an error (which can be tedious and stressful to handle).

[oracle@Node1 ~]$ $ORACLE_HOME/bin/crsctl stat res -t |grep acfs

ora.datac1.acfsvol01.acfs

ONLINE ONLINE Node1 mounted on /acfs01,S

ONLINE ONLINE Node2 mounted on /acfs01,S

ONLINE ONLINE Node3 mounted on /acfs01,S

ONLINE ONLINE Node4 mounted on /acfs01,S

ora.datac1.ghchkpt.acfs

[oracle@Node1 ~]$ $ORACLE_HOME/bin/crsctl stat res -w "TYPE = ora.acfs.type" -p | grep VOLUME

AUX_VOLUMES=

CANONICAL_VOLUME_DEVICE=/dev/asm/acfsvol01-311

VOLUME_DEVICE=/dev/asm/acfsvol01-311

Check and stop the ACFS file system on each node:

/u01/app/18.0.0/grid/bin/srvctl stop filesystem -d /dev/asm/acfsvol01-311 -n Node1

/u01/app/18.0.0/grid/bin/srvctl stop filesystem -d /dev/asm/acfsvol01-311 -n Node2

/u01/app/18.0.0/grid/bin/srvctl stop filesystem -d /dev/asm/acfsvol01-311 -n Node3

/u01/app/18.0.0/grid/bin/srvctl stop filesystem -d /dev/asm/acfsvol01-311 -n Node4
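On larger clusters the per-node commands can be generated rather than typed. The sketch below only prints the commands for review and does not run them; it uses the `-d <device> -n <node>` form of `srvctl stop filesystem`, and the function name is an assumption for illustration.

```shell
#!/usr/bin/env bash
# print_acfs_stop_commands SRVCTL DEVICE NODE...
# Prints (does not execute) one "srvctl stop filesystem" command per node.
print_acfs_stop_commands() {
    local srvctl="$1" device="$2" node
    shift 2
    for node in "$@"; do
        printf '%s stop filesystem -d %s -n %s\n' "$srvctl" "$device" "$node"
    done
}
```

Calling `print_acfs_stop_commands /u01/app/18.0.0/grid/bin/srvctl /dev/asm/acfsvol01-311 Node1 Node2 Node3 Node4` prints one line per node, which can be reviewed and then pasted into the terminal.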

11) Put the cluster in blackout in OEM and comment out any cron jobs that might send alerts.

12) Prepare to apply the July 2020 RU to the 19c Grid home on node1

This is worth noting: it is possible, and recommended, to patch the new GI home even before installing 19c GI. This saves time. On an 8-node cluster, for example, you avoid patching every node separately: when you run gridSetup.sh with -applyRU, it patches the home on the local node first, then invokes the GUI and copies the patched home to all the other nodes.

a) Unzip the July, 2020 RU

cd /export/Sam/19c-Grid/

unzip p31305339_190000_Linux-x86-64.zip

ls -ltr

[grid@node1 +ASM1]$ ls -l

total 232

drwxr-x--- 7 grid oinstall 4096 Oct 9 17:11 31305339

drwxr-x--- 14 grid oinstall 4096 Jan 24 15:23 OPatch-ORG

-rw-rw-r-- 1 grid oinstall 225499 Oct 15 13:24 PatchSearch.xml

b) Copy the latest OPatch to the new Grid home.

As root on Node1:

cd /u01/app/19.0.0.0/grid

mv OPatch OPatch.orig.date

cp /export/Sam/19c-Grid/p6880880_190000_Linux-x86-64.zip .

unzip p6880880_190000_Linux-x86-64.zip

chown -Rf oracle:oinstall OPatch

chmod 755 OPatch

13) Grid Upgrade:

Run gridSetup.sh with the -applyRU option to apply the patch and start the grid upgrade as below.

The installation log is located at /u01/app/oraInventory/logs. For OUI installations or execution of critical scripts it is recommended to use VNC or screen to avoid problems in case the connection to the server is lost.

Perform these instructions as the Grid Infrastructure software owner (which is grid in this document) to install the 19c Grid Infrastructure software and upgrade Oracle Clusterware and ASM from 12.1.0.2, 12.2.0.1, 18.1.0.0 to 19c. The upgrade begins with Oracle Clusterware and ASM running and is performed in a rolling fashion. The upgrade process manages stopping and starting Oracle Clusterware and ASM and making the new 19c Grid Infrastructure Home the active Grid Infrastructure Home.

For systems with a standby database in place, this step can be performed before, at the same time as, or after extracting the Grid image file on the primary system.

- applyRU: Applies the Release Update BEFORE the 19c GI installation starts.

- mgmtDB parameters: With 19c the MGMTDB is no longer needed. These parameters disable its installation, and the related options are not even shown during the graphical installation.

[grid@Node1 +ASM1]$

[grid@Node1 +ASM1]$ unset ORACLE_HOME

[grid@Node1 +ASM1]$ unset ORACLE_BASE

[grid@Node1 +ASM1]$ unset ORACLE_SID

[grid@Node1 ]$

[grid@Node1 ]$ cd /u01/app/19.0.0.0/grid/

[grid@Node1 ]$

[grid@Node1 ]$ export DISPLAY=xx.xx.xxx.xx:1.0

[grid@Node1 ]$ /u01/app/19.0.0.0/grid/gridSetup.sh -applyRU /export/Sam/19c-Grid/31305339 -J-Doracle.install.mgmtDB=false -J-Doracle.install.mgmtDB.CDB=false -J-Doracle.install.crs.enableRemoteGIMR=false

This command first applies the patch and then launches the GUI for the Grid upgrade.

On the Select Nodes screen, do not select "Skip upgrade on unreachable nodes", and click "Next".

Skip the EM registration check (do this after the installation) and click "Next".

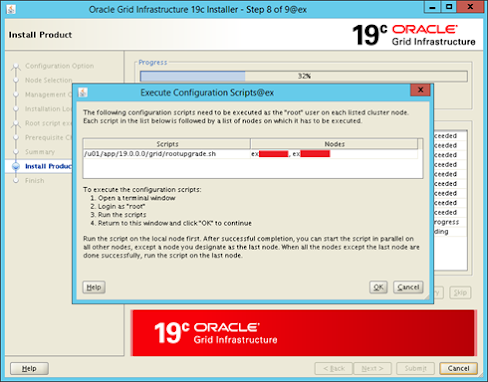

rootupgrade.sh needs to be executed node by node.

NOTE: After rootupgrade.sh completes successfully on the local node, you can run the script in parallel on other nodes except for the last node. When the script has completed successfully on all the nodes except the last node, run the script on the last node.

Do not run rootupgrade.sh on the last node until the script has run successfully on all other nodes.
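The ordering rule in the note can be written down as a small planning helper. This is only a sketch that prints the phases for a given node list; it does not run rootupgrade.sh, and the function name is hypothetical. The walkthrough that follows simply runs one node at a time, which also satisfies the rule.

```shell
#!/usr/bin/env bash
# rootupgrade_plan NODE...
# Prints the phases implied by the note: the first node alone,
# the middle nodes (parallel runs allowed), then the last node alone.
rootupgrade_plan() {
    local nodes=("$@") count=$#
    [ "$count" -ge 1 ] || return 1
    echo "phase 1 (alone): ${nodes[0]}"
    if [ "$count" -gt 2 ]; then
        # Slice out everything between the first and the last node.
        echo "phase 2 (parallel allowed): ${nodes[*]:1:count-2}"
    fi
    if [ "$count" -ge 2 ]; then
        echo "final phase (alone): ${nodes[count-1]}"
    fi
}
```

Running `rootupgrade_plan node1 node2 node3 node4` lists node1 first, node2 and node3 as the group that may overlap, and node4 last.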

NODE1: Stop all the databases and ACFS mounts on all the nodes.

[root@Node1]# /u01/app/19.0.0.0/grid/rootupgrade.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/19.0.0.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

..

…..

………

2020/10/03 14:00:57 CLSRSC-474: Initiating upgrade of resource types

2020/10/03 14:02:09 CLSRSC-475: Upgrade of resource types successfully initiated.

2020/10/03 14:02:24 CLSRSC-595: Executing upgrade step 18 of 18: 'PostUpgrade'.

2020/10/03 14:02:34 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

------------------------------------------------------------------------------------

NODE2: Stop all the databases and ACFS mounts on all the nodes.

[root@Node2 ~]# /u01/app/19.0.0.0/grid/rootupgrade.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/19.0.0.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]: y

..

…..

…….

2020/10/03 14:45:04 CLSRSC-476: Finishing upgrade of resource types

2020/10/03 14:45:18 CLSRSC-477: Successfully completed upgrade of resource types

2020/10/03 14:45:45 CLSRSC-595: Executing upgrade step 18 of 18: 'PostUpgrade'.

Successfully updated XAG resources.

2020/10/03 15:11:21 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

----------------------------------------------------------------------------------

NODE3: Stop all the databases and ACFS mounts on all the nodes.

Once Node 2 completes, run rootupgrade.sh on Node 3.

-------------------------------------------------------------------------------------

NODE4: Stop all the databases and ACFS mounts on all the nodes.

Once Node 3 also completes, run rootupgrade.sh on Node 4.

-------------------------------------------------------------------------------------

Once rootupgrade.sh has been run on all the nodes, continue with the graphical installation and press OK.

14) Once the upgrade completes, check that ASM is up and running on all the nodes. Also perform an extra check on the status of Grid Infrastructure by executing the following commands from one of the compute nodes:

[root@Node1 ~]# /u01/app/19.0.0.0/grid/bin/crsctl check cluster -all

************************************************************

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

**************************************************************

node-2:

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

**************************************************************

[root@Node2 ~]# /u01/app/19.0.0.0/grid/bin/crsctl query crs activeversion

Oracle Clusterware active version on the cluster is [19.0.0.0.0]
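In a post-upgrade script, the version can be pulled out of that message and compared. The sketch below assumes the message format shown above; the function name is illustrative.

```shell
#!/usr/bin/env bash
# active_version
# Reads "crsctl query crs activeversion" output on stdin and prints
# the version found between the square brackets.
active_version() {
    sed -n 's/.*active version on the cluster is \[\([0-9.]*\)\].*/\1/p'
}
```

For example, `/u01/app/19.0.0.0/grid/bin/crsctl query crs activeversion | active_version` would print the bare version string, which can then be tested with a `case` pattern such as `19.*`.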

15) Check the lspatches

[oracle@Node101 ~]$ $ORACLE_HOME/OPatch/opatch lspatches

31228670;REBALANCE DISK RESYNC CAUSING LOST WRITE ORA-00600 [KDSGRP1] OR ORA-01172 ON CRASH RECOVERY

31281355;Database Release Update : 19.8.0.0.200714 (31281355)

31335188;TOMCAT RELEASE UPDATE 19.0.0.0.0 (31335188)

31305087;OCW RELEASE UPDATE 19.8.0.0.0 (31305087)

31304218;ACFS RELEASE UPDATE 19.8.0.0.0 (31304218)

16) COMPATIBILITY attribute (set after 1 week)

WAIT FOR 1 WEEK FOR THIS STEP

---------------------------------------

Wait for 1 week, and then check and set the compatible.asm attribute on all the disk groups to enable the new features.

. oraenv

+ASM1

SELECT name AS diskgroup, substr(compatibility,1,12) AS asm_compat, substr(database_compatibility,1,12) AS db_compat FROM V$ASM_DISKGROUP;

DISKGROUP                      ASM_COMPAT   DB_COMPAT
------------------------------ ------------ ------------
DATAC1                         18.0.0.0.0   11.2.0.4.0
RECOC1                         18.0.0.0.0   11.2.0.4.0

ALTER DISKGROUP DATAC1 SET ATTRIBUTE 'compatible.asm' = '19.3.0.0.0';

ALTER DISKGROUP RECOC1 SET ATTRIBUTE 'compatible.asm' = '19.3.0.0.0';

SELECT name AS diskgroup, substr(compatibility,1,12) AS asm_compat, substr(database_compatibility,1,12) AS db_compat FROM V$ASM_DISKGROUP;

DISKGROUP                      ASM_COMPAT   DB_COMPAT
------------------------------ ------------ ------------
DATAC1                         19.3.0.0.0   11.2.0.4.0
RECOC1                         19.3.0.0.0   11.2.0.4.0
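With many disk groups, the statements can be generated and reviewed before they are run in sqlplus. This is a minimal sketch with a hypothetical function name; it only prints SQL. Review matters here because compatible.asm cannot be lowered again once it has been advanced.

```shell
#!/usr/bin/env bash
# compat_statements VERSION DISKGROUP...
# Prints one ALTER DISKGROUP statement per disk group, with the
# version value correctly wrapped in single quotes.
compat_statements() {
    local version="$1" dg
    shift
    for dg in "$@"; do
        printf "ALTER DISKGROUP %s SET ATTRIBUTE 'compatible.asm' = '%s';\n" "$dg" "$version"
    done
}
```

Calling `compat_statements 19.3.0.0.0 DATAC1 RECOC1` prints the two statements used in this step.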

17) Check that Flex ASM cardinality is set to "ALL"

Note: Starting with release 12.2, ASM is configured as "Flex ASM". By default, Flex ASM cardinality is set to 3.

This means configurations with four or more database nodes in the cluster might only see ASM instances on three nodes. Nodes without a local ASM instance will use an ASM instance on a remote node within the cluster. Only when the cardinality is set to "ALL" will ASM bring up the additional instances required to fulfill the cardinality setting.

On Node 1:

. oraenv

+ASM1

srvctl config asm

The output should include the following line:

ASM instance count: ALL

If it does not, set it:

srvctl modify asm -count ALL
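The check can be scripted by extracting the value from the "ASM instance count:" line. This is a sketch under the assumption that the line appears exactly in that form; the function name is illustrative.

```shell
#!/usr/bin/env bash
# asm_count
# Reads "srvctl config asm" output on stdin and prints the value
# that follows "ASM instance count:".
asm_count() {
    sed -n 's/^ASM instance count:[[:space:]]*//p'
}
```

A script could then run `[ "$(srvctl config asm | asm_count)" = "ALL" ] || srvctl modify asm -count ALL` so the setting is changed only when needed.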

18) Perform Inventory update

An inventory update is required for the 19c Grid home because in 19c the cluster node names are not registered in the inventory. Older database version tools relied on node names from the inventory.

Run the following command on the local node when using earlier database releases with 19c GI.

Run this step on every node.

Node1

(grid)$ /u01/app/19.0.0.0/grid/oui/bin/runInstaller -nowait -waitforcompletion -ignoreSysPrereqs -updateNodeList ORACLE_HOME=/u01/app/19.0.0.0/grid "CLUSTER_NODES={node1,node2,node3,node4}" CRS=true LOCAL_NODE=local_node

Node2

(grid)$ /u01/app/19.0.0.0/grid/oui/bin/runInstaller -nowait -waitforcompletion -ignoreSysPrereqs -updateNodeList ORACLE_HOME=/u01/app/19.0.0.0/grid "CLUSTER_NODES={node1,node2,node3,node4}" CRS=true LOCAL_NODE=local_node

Node3

(grid)$ /u01/app/19.0.0.0/grid/oui/bin/runInstaller -nowait -waitforcompletion -ignoreSysPrereqs -updateNodeList ORACLE_HOME=/u01/app/19.0.0.0/grid "CLUSTER_NODES={node1,node2,node3,node4}" CRS=true LOCAL_NODE=local_node

Node4

(grid)$ /u01/app/19.0.0.0/grid/oui/bin/runInstaller -nowait -waitforcompletion -ignoreSysPrereqs -updateNodeList ORACLE_HOME=/u01/app/19.0.0.0/grid "CLUSTER_NODES={node1,node2,node3,node4}" CRS=true LOCAL_NODE=local_node
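Since the command differs per node only in LOCAL_NODE, the lines can be generated. The sketch below prints one command per node and assumes that the local_node placeholder stands for the name of the node the command is run on; that reading, and the function name, are assumptions.

```shell
#!/usr/bin/env bash
# print_update_nodelist GRID_HOME NODE...
# Prints (does not execute) the -updateNodeList command for each node,
# prefixed with the node it is meant to run on.
print_update_nodelist() {
    local grid_home="$1" node_list node
    shift
    # Join all node names with commas for CLUSTER_NODES.
    node_list=$(IFS=,; echo "$*")
    for node in "$@"; do
        printf '%s: %s/oui/bin/runInstaller -nowait -waitforcompletion -ignoreSysPrereqs -updateNodeList ORACLE_HOME=%s "CLUSTER_NODES={%s}" CRS=true LOCAL_NODE=%s\n' \
            "$node" "$grid_home" "$grid_home" "$node_list" "$node"
    done
}
```

For the cluster in this post, `print_update_nodelist /u01/app/19.0.0.0/grid node1 node2 node3 node4` prints four lines, one to run as the grid user on each node.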

19) Disable Diagsnap for Exadata

Due to Bugs 24900613, 25785073 and 25810099, Diagsnap should be disabled for Exadata.

(grid)$ cd /u01/app/19.0.0.0/grid/bin

(grid)$ ./oclumon manage -disable diagsnap

20) Edit the oratab so that the Grid home entry points to the 19c home

21) Remove the blackouts

22) Start the listener and all the databases

********************************************************** -------- END OF UPGRADE -------- **********************************************************
